AI Meets Blockchain: Revolutionizing Data Trust, Security, and Monetization through Tokenization and Smart Contracts

Dear Reader,

Over the past several months, I’ve been on a personal journey to achieve my Blackbelt in Organizational AI implementation. Along the way, I’ve integrated my understanding of Blockchain, Crypto, Digital Assets, Fintech, and the real-world challenge of data integrity and monetization. The result? A solution that is not just a step forward but a leap into the future of data management.

As someone deeply immersed in technology, I want to be transparent. I use AI tools like ChatGPT, Claude, and Copilot to assist me in refining my writing. While these tools are invaluable for sharpening the delivery of my content, the ideas, concepts, and technical constructs are entirely my own. Human creativity drives the thoughtful use of these technologies, and that is where meaningful innovation comes from.

After months of refining complex ideas, I’ve attempted to craft a digestible and informative document. AI has been an assistant in this journey, but the hard work, intellectual effort, and vision remain mine.

Please note that the following is somewhat technical. I have done my best to present it at a level every reader can follow. Should you want a more in-depth read, please reach out and request a copy of the more technical Ideation document.

AI and Blockchain: A New Era for Data Integrity, Security, and Monetization

Data is the new oil in today’s digital landscape—it powers businesses, drives innovation, and fuels decision-making across industries. However, with the explosion of data comes a critical challenge: How do we ensure integrity, security, and monetization while maintaining privacy and compliance?

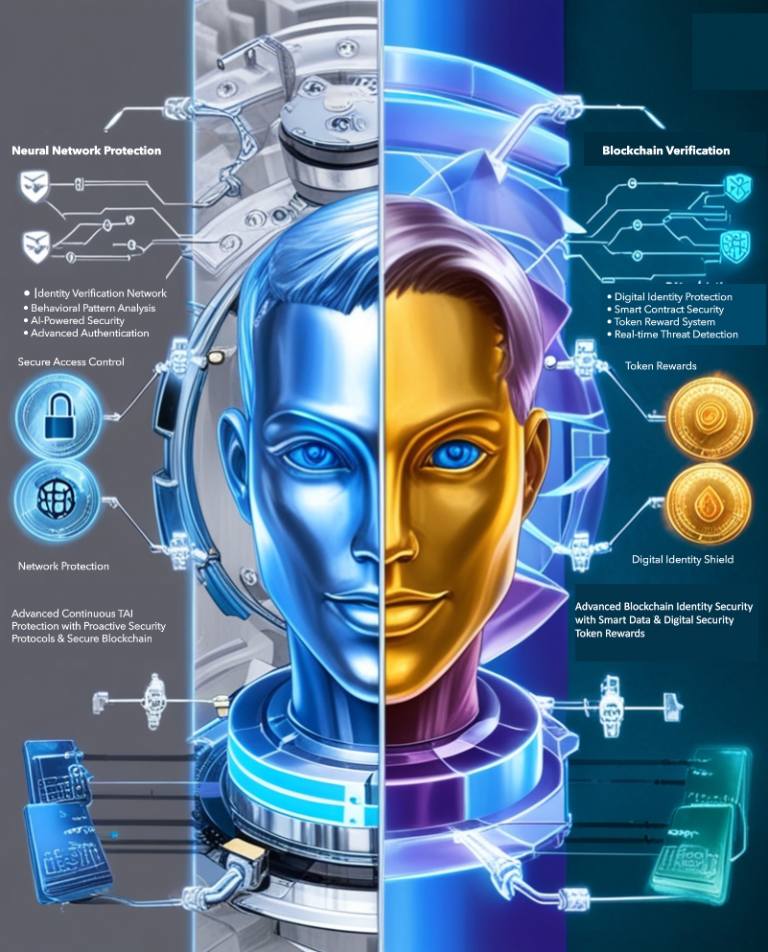

By combining the power of Artificial Intelligence (AI), Blockchain, smart contracts, and tokenized data, I’ve developed a conceptual framework that addresses these challenges head-on. This framework offers one approach to managing data across its entire lifecycle, from ingestion to monetization. It paves the way for a more secure, efficient, and accessible data-driven economy that has the potential to reshape industries.

Let me share what I’ve been working on and let me know what you think in the comments section.

The Foundation: AI, Blockchain, and Data Tokenization

At the core of my AI and Blockchain-based framework are two key concepts: data integrity and monetization. Blockchain technology serves as the foundation, providing an immutable ledger that makes every recorded piece of data tamper-evident, secure, and traceable. This ledger ensures that data can be safely ingested, verified, and eventually tokenized.

But this isn’t just about storing data securely—it’s about unlocking its true potential.

Through tokenization, datasets are transformed into digital assets that can be owned, traded, and monetized. Each dataset is represented by either a non-fungible token (NFT) or another type of digital token:

- NFTs (Non-Fungible Tokens) are unique digital representations that cannot be replaced or exchanged on a one-to-one basis. For example, an NFT might represent an exclusive dataset containing proprietary research, or it could represent the entire historical record of a real estate property, offering buyers and sellers a comprehensive view of the asset’s history.

- Digital tokens, on the other hand, are more flexible and interchangeable. For instance, a dataset on consumer behavior could be divided into tokens, each representing partial ownership or access to a portion of the data, allowing multiple stakeholders to co-own or trade it. This enables fractional ownership and collaborative investment in valuable datasets.
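To make the NFT versus fungible-token distinction concrete, here is a minimal sketch in plain Python, not on-chain code. The class names `DatasetNFT` and `DatasetShares` are hypothetical; they borrow the general ideas behind the ERC-721 (non-fungible) and ERC-20 (fungible) token standards but implement none of their actual interfaces.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetNFT:
    """Hypothetical NFT for one unique dataset: exactly one owner at a time."""
    token_id: str
    owner: str

    def transfer(self, sender: str, recipient: str) -> None:
        # Only the current owner may hand the whole asset to someone else.
        if sender != self.owner:
            raise PermissionError("only the current owner can transfer this NFT")
        self.owner = recipient


@dataclass
class DatasetShares:
    """Hypothetical fungible shares in one dataset: any unit equals any other."""
    total_supply: int
    balances: dict[str, int] = field(default_factory=dict)

    def transfer(self, sender: str, recipient: str, amount: int) -> None:
        # Units are interchangeable, so a transfer just moves a quantity.
        if amount <= 0 or self.balances.get(sender, 0) < amount:
            raise ValueError("invalid amount or insufficient balance")
        self.balances[sender] -= amount
        self.balances[recipient] = self.balances.get(recipient, 0) + amount

    def ownership_fraction(self, holder: str) -> float:
        return self.balances.get(holder, 0) / self.total_supply


archive = DatasetNFT(token_id="research-archive-001", owner="lab")
archive.transfer("lab", "buyer")

consumer_data = DatasetShares(total_supply=1000, balances={"publisher": 1000})
consumer_data.transfer("publisher", "fund_a", 250)
print(archive.owner, consumer_data.ownership_fraction("fund_a"))  # buyer 0.25
```

The NFT changes hands as a whole, while the fungible shares let "fund_a" co-own a quarter of the consumer-behavior dataset, which is the fractional ownership described above.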

These tokens allow data to be bought, sold, or licensed in real time through smart contracts. A smart contract is a self-executing program stored on the blockchain that automatically enforces the terms of an agreement once certain conditions are met. It acts like a digital contract that doesn’t need a middleman, ensuring the transaction is transparent, secure, and automatic without requiring manual oversight.

This system of tokenization transforms data from a static asset into a dynamic, tradeable resource. It unlocks new economic opportunities across industries and lets data owners and users exchange value seamlessly.

From Validation to Tokenization: Creating Unique Identifiable Value

Once data has been validated through a rigorous AI-assisted process, it’s ready to be tokenized. But what exactly does tokenizing data mean?

Tokenization converts validated data into a unique digital asset, represented as a token on the blockchain. Each token carries a unique identifier that ties it directly to a specific dataset, ensuring it is immutable, traceable, and secure. Essentially, these tokens act as certificates of ownership for the dataset, allowing the data to be bought, sold, or licensed with full transparency.
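One common way to give a token a unique identifier that ties it directly to a specific dataset is a cryptographic hash of the data. The sketch below assumes that approach (SHA-256 over a canonical JSON serialization); `dataset_fingerprint`, `mint_token`, and the in-memory `registry` are hypothetical stand-ins for an on-chain registry. In practice the raw data would typically stay off-chain, with only the hash recorded on the ledger.

```python
import hashlib
import json


def dataset_fingerprint(records: list[dict]) -> str:
    """SHA-256 hex digest of the records in a canonical JSON form.

    sort_keys and fixed separators make the serialization deterministic; record
    order still matters, so callers should sort records first if order is not
    meaningful.
    """
    canonical = json.dumps(records, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def mint_token(records: list[dict], owner: str, registry: dict[str, dict]) -> str:
    """Hypothetical minting step: use the fingerprint as the token's identifier."""
    token_id = dataset_fingerprint(records)
    if token_id in registry:
        raise ValueError("this exact dataset has already been tokenized")
    registry[token_id] = {"owner": owner, "record_count": len(records)}
    return token_id


registry: dict[str, dict] = {}
data = [{"region": "EU", "purchases": 12}, {"region": "US", "purchases": 7}]
token_id = mint_token(data, owner="acme", registry=registry)

# Changing a single value yields a different identifier, which is what makes
# the link between token and dataset tamper-evident.
edited = [{"region": "EU", "purchases": 13}, {"region": "US", "purchases": 7}]
print(dataset_fingerprint(edited) != token_id)  # True
```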

Here, smart contracts come into play. Because they are coded directly onto the blockchain, they automatically enforce the terms of any transaction involving the tokenized data. In this framework, smart contracts govern the entire lifecycle of a data asset. They:

- Automatically record the token’s creation, linking the dataset immutably to its blockchain entry, ensuring data integrity.

- Enforce ownership, access rights, usage terms, and compliance (such as GDPR or HIPAA requirements, if applicable).

- Govern the transfer of assets, ensuring compliance with pre-set rules, such as licensing fees, access periods, or fractional ownership models.

- Provide complete transparency and eliminate the need for intermediaries, enabling direct transactions between data owners and users.
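The rules in the list above can be modeled as a small set of checks around ownership, licensing, and an append-only record. The following is a plain-Python sketch of what such a contract might enforce, not deployable smart-contract code (which would typically be written in a language such as Solidity). `DataLicenseContract`, its fields, and the fee and access-period values are hypothetical, and compliance checks such as GDPR or HIPAA rules are omitted.

```python
from dataclasses import dataclass, field


@dataclass
class DataLicenseContract:
    """Hypothetical model of the terms a data smart contract might enforce.

    Times are integers supplied by the caller (e.g., Unix seconds); amounts are
    integers in the smallest currency unit.
    """
    token_id: str
    owner: str
    license_fee: int
    access_period: int
    licenses: dict[str, int] = field(default_factory=dict)  # user -> expiry time
    payouts: dict[str, int] = field(default_factory=dict)   # recipient -> total paid
    ledger: list[tuple] = field(default_factory=list)       # append-only event log

    def buy_license(self, user: str, payment: int, now: int) -> None:
        # Pre-set rule: the exact fee buys one access period, paid to the current owner.
        if payment != self.license_fee:
            raise ValueError("payment must equal the license fee")
        self.payouts[self.owner] = self.payouts.get(self.owner, 0) + payment
        self.licenses[user] = now + self.access_period
        self.ledger.append(("license", user, payment, now))

    def has_access(self, user: str, now: int) -> bool:
        # The owner always has access; licensees only until their expiry time.
        return user == self.owner or self.licenses.get(user, now) > now

    def transfer_ownership(self, sender: str, recipient: str, now: int) -> None:
        if sender != self.owner:
            raise PermissionError("only the owner can transfer the asset")
        self.owner = recipient
        self.ledger.append(("transfer", sender, recipient, now))


contract = DataLicenseContract("token-001", owner="alice", license_fee=500, access_period=3600)
contract.buy_license("bob", payment=500, now=0)
print(contract.has_access("bob", now=1800), contract.has_access("bob", now=3600))  # True False
```

Every state change goes through a method that checks its precondition and appends to `ledger`, mirroring how a contract would reject invalid transactions and emit a record of valid ones.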

This automated process ensures that data owners retain control and ownership of their data while users can interact with it seamlessly and securely. With smart contracts in place, the journey from validation to tokenization is fully automated, removing the traditional friction and risks associated with data transactions while unlocking the ability to monetize data more efficiently.

Unleashing Data’s Power in the AI-Driven World

Now, let’s look at what happens once the data has been tokenized. It doesn’t just exist as a static asset on the blockchain; it enters the broader AI universe, where it becomes a valuable resource for end users to tap into. Large Language Models (LLMs) like GPT-4, Claude, and other AI systems can access these tokenized datasets to help users solve specific problems or answer queries.

This is where the real value chain begins to emerge, allowing users to interact with tokenized data and extract actionable insights, all while ensuring the data owners are compensated at every interaction. The AI engine acts as the intermediary, pulling insights from the data without exposing the raw dataset itself. Every time a user interacts with the dataset, a microtransaction is initiated through the smart contract, automatically compensating the data owner.
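The pay-per-query idea can be sketched as a gateway object that sits between the AI engine and the data. This is a minimal illustration under stated assumptions: `QueryGateway` and `average_by` are hypothetical names, the price is an integer in the smallest currency unit, and a plain counter stands in for the smart-contract micro-payment.

```python
from collections import defaultdict
from statistics import mean


class QueryGateway:
    """Hypothetical gateway between an AI assistant and a tokenized dataset.

    It answers aggregate questions and never returns raw rows, and it records
    one micro-payment to the data owner for every query it serves.
    """

    def __init__(self, records: list[dict], owner: str, price_per_query: int):
        self._records = records  # kept internal; only aggregates are returned
        self.owner = owner
        self.price_per_query = price_per_query
        self.earnings: dict[str, int] = defaultdict(int)
        self.query_log: list[tuple[str, str]] = []

    def average_by(self, user: str, group_key: str, value_key: str) -> dict:
        groups: dict = defaultdict(list)
        for row in self._records:
            groups[row[group_key]].append(row[value_key])
        # Each served query is a monetization event for the data owner.
        self.earnings[self.owner] += self.price_per_query
        self.query_log.append((user, f"average {value_key} by {group_key}"))
        return {group: mean(values) for group, values in groups.items()}


rows = [
    {"region": "EU", "spend": 40},
    {"region": "EU", "spend": 60},
    {"region": "US", "spend": 30},
]
gateway = QueryGateway(rows, owner="data_owner", price_per_query=2)
print(gateway.average_by("marketing_team", "region", "spend"))  # {'EU': 50, 'US': 30}
print(gateway.earnings["data_owner"])  # 2
```

Returning only aggregates is one way to avoid exposing raw rows; a production design would also need safeguards, since aggregates over very small groups can still reveal individual records.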

Here’s how it works in practice:

- Example 1: Business Analytics: A company might use AI to analyze a tokenized dataset containing customer behavior data. The AI system can filter the data by region, customer age, or buying preferences and return targeted insights to help the company optimize its marketing strategy. In this scenario, the data owner is compensated for each query the AI runs on the dataset.

- Example 2: Market Trends: A business may ask the AI to analyze market trends based on the tokenized dataset. The AI can identify emerging consumer preferences or detect shifts in the market. Every time a new insight is generated from the data, the AI creates a monetization event, ensuring the data owner is paid for the dataset’s contribution to these insights.

- Example 3: Personalized Learning for Students or Creators: A student or creative professional, such as a musician or artist, might use AI to interact with tokenized datasets for learning or inspiration. For instance, a student could query AI-powered educational platforms to analyze a tokenized archive of historical artworks, exploring artistic movements or specific techniques. Similarly, a musician might use AI to study tokenized music data, identifying patterns across genres to inspire new compositions. Each interaction with the dataset, whether querying the styles of Renaissance painters or analyzing jazz chord progressions, creates a monetization event, ensuring that the data owners, such as art collectors or music archives, are compensated for every use of their intellectual property.

The traceability of these interactions, combined with the automated payments powered by smart contracts, ensures that data is continuously monetized as it’s accessed and used—whether through a one-time query or a subscription-based model. AI serves as the gateway that enables users to engage with the data without needing direct access to its raw form, providing both security and transparency.

From Tokenization to Monetization: Unlocking the Value of Data

Once the data is tokenized and integrated into the AI ecosystem, it becomes a true revenue-generating asset. But how does the monetization process unfold?

When an individual, company, or AI engine seeks access to the tokenized data, a smart contract is triggered. These smart contracts automatically execute the terms of the agreement—whether it’s a one-time payment, an ongoing subscription, fractional ownership, or ongoing usage—without any need for manual intervention. Here are a few detailed examples to illustrate how it works:

- One-time payment: A researcher seeking access to a specific dataset for a one-off project pays a single fee, and the smart contract grants access while recording the transaction on the blockchain. AI tools allow the researcher to query the dataset for specific information and receive insights without needing access to the raw data. This keeps use of the dataset secure while compensating the data owner.

- Subscription model: A company needing continuous access to a dataset for long-term analysis pays for a subscription. The smart contract ensures regular payments to the data owner and maintains access for the company. AI systems allow the company to run automated queries and analytics on the dataset, continuously extracting value, while the smart contract tracks usage and compensation.

- Fractional ownership: In scenarios where multiple parties want to co-own a dataset, the smart contract manages fractional ownership, ensuring that each stakeholder’s share is fairly compensated and governed by pre-set rules. AI models facilitate the filtering and targeted use of the dataset by multiple stakeholders based on their needs, while ensuring proper compensation for each stakeholder.

- Ongoing usage for creative industries: In fields such as art, music, or digital content, the tokenized asset (e.g., a song, artwork, or document) can be licensed for continuous use. AI engines can recommend these assets based on user preferences, ensuring that the original creators receive compensation each time the asset is used or accessed through personalized AI-driven searches.
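For the fractional-ownership model, the core arithmetic is a pro-rata split of each payment. The sketch below assumes payments arrive as integers in the token’s smallest unit and uses the largest-remainder method so that rounding never creates or loses money; `split_payment` is a hypothetical helper, not part of any specific contract standard.

```python
def split_payment(amount: int, shares: dict[str, int]) -> dict[str, int]:
    """Split an integer payment pro rata across share holders.

    Each holder first gets the floor of its exact share; leftover units go to the
    holders with the largest fractional remainders, so payouts always sum to
    `amount`.
    """
    total_shares = sum(shares.values())
    if total_shares <= 0:
        raise ValueError("at least one share must be outstanding")
    payouts: dict[str, int] = {}
    remainders: list[tuple[int, str]] = []
    for holder, held in shares.items():
        quotient, remainder = divmod(amount * held, total_shares)
        payouts[holder] = quotient
        remainders.append((remainder, holder))
    leftover = amount - sum(payouts.values())
    # Largest remainders first; ties broken by holder name for determinism.
    for _, holder in sorted(remainders, key=lambda r: (-r[0], r[1]))[:leftover]:
        payouts[holder] += 1
    return payouts


print(split_payment(100, {"a": 1, "b": 1, "c": 1}))  # {'a': 34, 'b': 33, 'c': 33}
```

Working in integer base units rather than floats keeps every payout exact, which matters when the same split runs on thousands of small payments.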

What makes this process even more efficient is the use of stablecoins like Circle’s USDC. Stablecoins provide the necessary stability and liquidity for real-time compensation. Each time the data is accessed or used, the data owner is compensated almost instantly, without the delays or volatility often associated with cryptocurrency payments.
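Because microtransactions can be fractions of a cent, payment amounts are usually handled as integers rather than floating-point numbers. USDC token contracts typically use 6 decimal places, so one USDC equals 1,000,000 base units. The helpers below are hypothetical and only show that accounting; they do not call any Circle or blockchain API.

```python
from decimal import Decimal, ROUND_DOWN

USDC_DECIMALS = 6  # USDC token contracts typically use 6 decimal places


def to_base_units(amount: str) -> int:
    """Convert a decimal USDC amount such as "0.002" to integer base units."""
    scaled = Decimal(amount) * 10 ** USDC_DECIMALS
    # Anything smaller than one base unit cannot be paid, so round down.
    return int(scaled.to_integral_value(rounding=ROUND_DOWN))


def from_base_units(units: int) -> Decimal:
    """Convert integer base units back to a USDC amount for display."""
    return Decimal(units) / 10 ** USDC_DECIMALS


price_per_query = to_base_units("0.002")  # 2000 base units
queries_today = 1_250
owner_earnings = price_per_query * queries_today
print(from_base_units(owner_earnings))  # 2.5
```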

This framework transforms what was once a static asset (data stored in a database) into a dynamic, revenue-generating resource. Every interaction with the tokenized data—from an AI engine accessing it to a business analyst running queries—is monetized through microtransactions, creating a continuous income stream for data owners.

On-and-Off Ramping: Bridging the Traditional and Digital Worlds

One of the most powerful features of this framework is the seamless on-and-off ramping between fiat currencies (e.g., USD) and digital assets such as stablecoins, which are in turn used to buy and sell tokenized data. On-and-off ramping allows users to move easily between traditional payment methods and the digital economy.

This is where payment networks and processors such as Visa, PayPal, WhatsApp Pay, and ACH come into play. These payment rails allow users to convert fiat currency into stablecoins (on-ramp) and back again (off-ramp), so participants can engage with digital assets even if they are unfamiliar with cryptocurrency.

For example:

- Visa and PayPal enable users to purchase data tokens using credit cards or bank accounts. Stablecoins like USDC are credited to the user’s digital wallet, allowing them to participate in the tokenized data economy.

- WhatsApp Pay and ACH facilitate the conversion of digital assets back into fiat currency, allowing businesses or individuals to withdraw their earnings into traditional bank accounts.
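The on-ramp and off-ramp steps amount to a conversion plus a fee. The sketch below assumes a 1:1 USD-to-USDC peg and a flat percentage fee; the fee rates, the `Wallet` class, and both functions are hypothetical, and no real Visa, PayPal, WhatsApp Pay, or ACH interface is modeled.

```python
from dataclasses import dataclass
from decimal import Decimal, ROUND_DOWN, ROUND_HALF_UP

CENT = Decimal("0.01")


@dataclass
class Wallet:
    usdc: Decimal = Decimal("0")


def on_ramp(wallet: Wallet, usd: str, fee_rate: str) -> Decimal:
    """Fiat in, stablecoin out: credit USD minus the fee (1:1 peg assumed)."""
    gross = Decimal(usd)
    fee = (gross * Decimal(fee_rate)).quantize(CENT, rounding=ROUND_HALF_UP)
    wallet.usdc += gross - fee
    return gross - fee


def off_ramp(wallet: Wallet, usdc: str, fee_rate: str) -> Decimal:
    """Stablecoin in, fiat out: debit the wallet and return the bank payout."""
    amount = Decimal(usdc)
    if amount > wallet.usdc:
        raise ValueError("insufficient stablecoin balance")
    wallet.usdc -= amount
    fee = (amount * Decimal(fee_rate)).quantize(CENT, rounding=ROUND_HALF_UP)
    # Bank transfers settle in whole cents, so any sub-cent remainder is dropped.
    return (amount - fee).quantize(CENT, rounding=ROUND_DOWN)


wallet = Wallet()
on_ramp(wallet, "100.00", fee_rate="0.01")  # credits 99.00 USDC
payout = off_ramp(wallet, "50", fee_rate="0.01")
print(payout, wallet.usdc)  # 49.50 49.00
```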

This seamless conversion between fiat currencies and stablecoins means that even those less familiar with blockchain technology can easily participate, eliminating friction and lowering the barriers to entry for businesses, governments, and individuals to access the digital economy.

Use Cases: Real-World Applications of Tokenized Data and AI Integration

1. Home Buying: Tokenizing Real Estate for Transparency and Efficiency

The real estate market has long been plagued by inefficiencies, slow processes, and lack of transparency. This framework changes that by tokenizing property data—creating a digital twin of each property. This twin includes legal documents, maintenance records, and the entire property transaction history.

When a property is tokenized, AI interfaces allow users to query property details, market trends, and transaction history. A potential buyer might ask the AI for insights into the home’s value over time or explore the development plans in the surrounding neighborhood. AI serves as the gateway to the tokenized data, enabling users to quickly access the most relevant details for their needs. The AI retrieves data directly from the blockchain, ensuring transparency and trust in the process.

Once the buyer decides to move forward, smart contracts handle everything from title transfer and escrow to mortgage approval, automating what would traditionally be a cumbersome, paperwork-heavy process. AI assists by tracking real-time updates and checking that every step adheres to compliance regulations. Payments are processed using stablecoins like USDC, and once the transaction is completed, the buyer’s ownership is recorded immutably on the blockchain.
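The closing sequence can be thought of as a state machine in which each step unlocks the next. This plain-Python sketch assumes a simplified order (mortgage approval, then deposit of the full price, then title check and settlement); the class, stage names, and amounts are hypothetical, and real closings involve more parties and checks.

```python
from enum import Enum, auto


class Stage(Enum):
    AWAITING_MORTGAGE = auto()
    AWAITING_FUNDS = auto()
    AWAITING_TITLE_CHECK = auto()
    CLOSED = auto()


class HomePurchaseEscrow:
    """Hypothetical closing workflow for a tokenized property.

    Each step is only allowed in the expected stage, so steps cannot be skipped
    or run out of order.
    """

    def __init__(self, property_token: str, seller: str, buyer: str, price: int):
        self.property_token = property_token
        self.seller = seller
        self.buyer = buyer
        self.price = price
        self.owner = seller
        self.escrow_balance = 0
        self.seller_proceeds = 0
        self.stage = Stage.AWAITING_MORTGAGE
        self.history: list[str] = []

    def _advance(self, expected: Stage, new_stage: Stage, note: str) -> None:
        if self.stage is not expected:
            raise RuntimeError(f"step {note!r} not allowed in stage {self.stage.name}")
        self.stage = new_stage
        self.history.append(note)

    def approve_mortgage(self) -> None:
        self._advance(Stage.AWAITING_MORTGAGE, Stage.AWAITING_FUNDS, "mortgage approved")

    def deposit(self, amount: int) -> None:
        if amount != self.price:
            raise ValueError("deposit must equal the agreed price")
        self._advance(Stage.AWAITING_FUNDS, Stage.AWAITING_TITLE_CHECK, "funds deposited")
        self.escrow_balance = amount

    def confirm_clear_title(self) -> None:
        self._advance(Stage.AWAITING_TITLE_CHECK, Stage.CLOSED, "title cleared")
        # Settlement: release escrowed funds and transfer ownership together.
        self.seller_proceeds, self.escrow_balance = self.escrow_balance, 0
        self.owner = self.buyer


escrow = HomePurchaseEscrow("property-42", seller="sam", buyer="bea", price=450_000)
escrow.approve_mortgage()
escrow.deposit(450_000)
escrow.confirm_clear_title()
print(escrow.owner, escrow.stage.name, escrow.seller_proceeds)  # bea CLOSED 450000
```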

In this scenario, AI continuously monitors the property data for updates (e.g., repairs or new construction) that could affect its future market value. This supports transparency and long-term value realization for buyers and sellers alike, while each query or interaction is monetized through microtransactions.

2. Government Procurement: Revolutionizing Transparency and Accountability

Government procurement is notoriously complex, often leading to inefficiencies and potential corruption. By tokenizing government contracts, the procurement process becomes fully transparent and AI-enhanced, ensuring better decision-making.

Let’s say a city is building a new public park. The project’s budget, timelines, contractor details, and milestones are tokenized onto the blockchain. Smart contracts automatically release payments as milestones are completed, reducing delays and human errors. AI algorithms analyze the procurement data, identifying potential cost-saving measures and flagging any irregularities in spending.
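Milestone-based payment release and a basic spending check can be sketched in a few lines. This assumes each milestone has a fixed payment agreed in advance and that completion is verified before `complete` is called; the class names, amounts, and the 10% tolerance are hypothetical, and the simple threshold rule is only a stand-in for the AI analysis described above, not an implementation of it.

```python
from dataclasses import dataclass, field


@dataclass
class Milestone:
    name: str
    payment: int
    paid: bool = False


@dataclass
class ProcurementContract:
    """Hypothetical public-works contract that pays per completed milestone."""
    contractor: str
    budget: int
    milestones: list[Milestone]
    released: int = 0
    log: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if sum(m.payment for m in self.milestones) > self.budget:
            raise ValueError("milestone payments exceed the approved budget")

    def complete(self, name: str) -> int:
        """Release the payment for a verified milestone exactly once."""
        milestone = next((m for m in self.milestones if m.name == name), None)
        if milestone is None:
            raise KeyError(name)
        if milestone.paid:
            raise ValueError(f"{name} has already been paid")
        milestone.paid = True
        self.released += milestone.payment
        self.log.append(f"paid {milestone.payment} to {self.contractor} for {name}")
        return milestone.payment

    def flag_irregularities(self, reported_costs: dict[str, int], tolerance_pct: int = 10) -> list[str]:
        """Flag milestones whose reported cost exceeds plan by more than the tolerance."""
        return [
            m.name
            for m in self.milestones
            if reported_costs.get(m.name, 0) * 100 > m.payment * (100 + tolerance_pct)
        ]


park = ProcurementContract(
    "builder_co",
    budget=1_000_000,
    milestones=[Milestone("site prep", 200_000), Milestone("landscaping", 500_000), Milestone("playground", 250_000)],
)
park.complete("site prep")
print(park.released)  # 200000
print(park.flag_irregularities({"site prep": 205_000, "landscaping": 620_000}))  # ['landscaping']
```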

Users (such as contractors or businesses) can interact with the AI-powered procurement system, asking specific questions about ongoing projects, costs, or upcoming tenders. For example, a contractor could ask the AI, “What projects are open for bidding in the next quarter?” AI provides tailored answers based on the tokenized contract data, while every interaction is monetized in the background, creating a stream of revenue for the data providers.

This real-time visibility not only ensures that citizens can track the progress of the project but also allows private companies to subscribe to government procurement insights. By accessing anonymized historical procurement data, businesses can improve their bidding strategies for future contracts.

The transparency provided by tokenization, combined with AI’s ability to continuously evaluate performance, ensures that taxpayer dollars are spent efficiently. This approach creates new revenue opportunities for public institutions through subscription models for businesses seeking procurement data insights.

3. Gene Sequencing: Privacy, Compensation, and Innovation

In healthcare, gene sequencing is transforming the way we approach personalized medicine. However, privacy and security concerns are critical. This framework offers a solution by tokenizing genetic data and turning individual genomic sequences into secure, traceable digital assets.

Patients can interact with AI interfaces that allow them to control and monitor how their tokenized genetic data is used. For instance, a patient might ask the AI, “Has my genetic data been used in any recent studies?” The AI pulls up a history of how their data was accessed, who accessed it, and what type of research it contributed to, ensuring full transparency.
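A patient-facing history like this needs an access log that cannot be quietly edited. One standard way to get that property is a hash chain, in which each entry stores the hash of the previous one. The sketch below assumes that design; `AccessLog` and its methods are hypothetical, and on a real blockchain the chaining would be provided by the ledger itself.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64


def _entry_hash(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AccessLog:
    """Hypothetical append-only, hash-chained log of data-access events."""
    entries: list[dict] = field(default_factory=list)

    def record(self, token_id: str, accessor: str, purpose: str, timestamp: int) -> None:
        body = {
            "token_id": token_id,
            "accessor": accessor,
            "purpose": purpose,
            "timestamp": timestamp,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        self.entries.append({**body, "hash": _entry_hash(body)})

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _entry_hash(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

    def history_for(self, token_id: str) -> list[tuple[int, str, str]]:
        """Answer "who used my data, and for what?" for one token."""
        return [
            (e["timestamp"], e["accessor"], e["purpose"])
            for e in self.entries
            if e["token_id"] == token_id
        ]


log = AccessLog()
log.record("genome-7", accessor="pharma_co", purpose="oncology study", timestamp=1_700_000_000)
log.record("genome-9", accessor="university_lab", purpose="rare disease study", timestamp=1_700_000_500)
log.record("genome-7", accessor="university_lab", purpose="cardiology study", timestamp=1_700_001_000)
print(log.history_for("genome-7"))
print(log.verify())  # True
log.entries[0]["purpose"] = "edited"
print(log.verify())  # False
```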

Pharmaceutical companies, researchers, and academic institutions can then access this anonymized, tokenized genetic data through smart contracts for drug development and research. Every time a patient’s data is used in a study, the patient is compensated in real time via stablecoins, ensuring they are fairly rewarded for contributing to scientific advancements.

Compensation for Secondary Use in New Research

In addition to accessing tokenized genetic data for immediate research, researchers may use the original data to develop new findings or even create new gene therapies. When this happens, AI tracks how the data is used and flags any instances where new derivative data or discoveries are made based on the original genetic information.

In these cases, a secondary smart contract is triggered, providing the original data owners with royalty payments for their contribution. This ensures that continuous compensation flows to the data owners as their genetic information enables future breakthroughs in research and healthcare.
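The secondary-use royalty can be expressed as a small payout rule. The sketch assumes a derivative (for example, licensing revenue from a new therapy) is registered together with the source tokens it drew on, and that a fixed royalty rate in basis points is agreed in advance; the function name, the 2% rate, and the equal per-token split are all hypothetical choices, not a description of how such royalties are actually set.

```python
def royalty_payouts(
    revenue: int,
    royalty_bps: int,
    source_tokens: list[str],
    token_owners: dict[str, str],
) -> tuple[dict[str, int], int]:
    """Split a royalty pool equally per source token.

    Returns (payout per data owner, amount kept by the derivative's creator).
    Amounts are integers in the smallest currency unit; 1 bps = 0.01%.
    """
    if not source_tokens:
        raise ValueError("a derivative must reference at least one source token")
    pool = revenue * royalty_bps // 10_000
    per_token = pool // len(source_tokens)
    payouts: dict[str, int] = {}
    for token in source_tokens:
        owner = token_owners[token]
        # An owner who contributed several tokens receives one share per token.
        payouts[owner] = payouts.get(owner, 0) + per_token
    kept = revenue - per_token * len(source_tokens)
    return payouts, kept


owners = {"genome-1": "patient_a", "genome-2": "patient_b", "genome-3": "patient_a"}
payouts, kept = royalty_payouts(1_000_000, 200, ["genome-1", "genome-2", "genome-3"], owners)
print(payouts, kept)  # {'patient_a': 13332, 'patient_b': 6666} 980002
```

Any rounding remainder from the pool stays with the derivative's creator here; a different contract could just as reasonably carry it forward to the next payout.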

This approach is designed to deliver ongoing value realization, rewarding the original contributors for secondary use cases and the long-term impact of their genetic data.

The Future of Data-Driven Economies

The fusion of AI and Blockchain is one method of revolutionizing data valuation, security, and monetization. With the integration of tokenization, smart contracts, and on-and-off ramping capabilities, businesses, governments, and individuals now have the tools to not only protect their data but also unlock its full potential as a revenue-generating asset.

The future of the digital economy lies in creating secure, transparent systems where data can be stored, accessed, traced, and monetized.

My goal at MPowerIQ is to stimulate creative thought and, through my own ideation, present a conceptual framework for a new data economy where trust, transparency, and monetization are seamlessly integrated.

Imagine a world where:

- Every property has a digital twin, streamlining real estate transactions

- Government procurement is as transparent as it is efficient

- Your genes are not only protected but also contribute to groundbreaking research, and you are compensated for it

In this world, your data is no longer just a record; it’s a resource for growth, innovation, and continuous compensation.

Questions for you:

- What role will your data play in the evolving digital economy, and how can tokenization unlock new value for your organization?

- How can AI and blockchain transform your industry’s data security, transparency, and monetization approach?

Let me know in the comments below.

Join Me in Shaping the Future

At MPowerIQ, I believe in the power of innovation to create lasting change. If you’re ready to explore new ideas, discuss potential collaborations, or dive deeper into what I do, let’s connect.

I’m eager to hear your thoughts and start building the future together!

Contact Me:

Email: info@mpoweriq.com

Set a Time: Schedule a meeting to explore how we can collaborate

Interested in the full Ideation document? Please complete the request form.

Let’s partner to turn vision into reality.